Why -- Let's assume that, because of a business requirement change, one of the columns in your source definition no longer exists or has been dropped from the database. This directly impacts every workflow that uses that source: if you run such a workflow, it will fail because the column no longer exists.

How -- The next question, then, is how to find all the workflows, mappings, and other objects that depend on a particular source. Informatica itself provides a facility to find these dependencies.

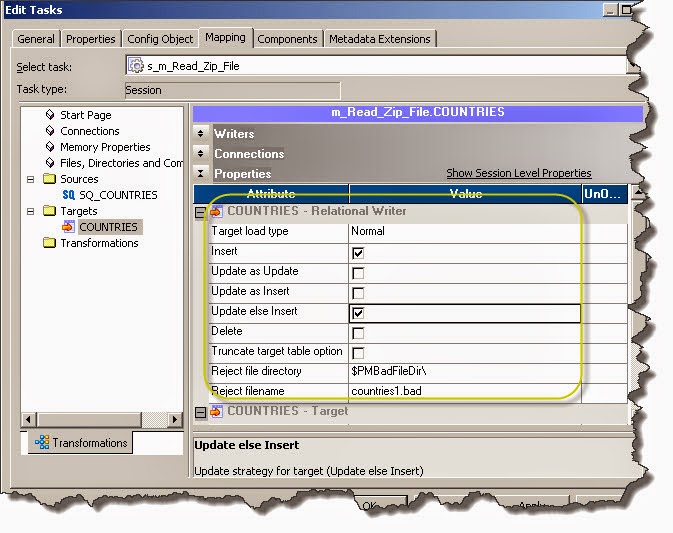

As shown in the diagram, if you right-click a source, target, or any other repository object, a pop-up menu appears with a "Dependencies..." option.

Click "Dependencies..." and another window appears. There you can choose which object types to include when searching for that object's dependencies.

In this case, I want to find out which mappings and workflows use my source "Countries" table, so I will uncheck every object type except Mappings and Workflows.
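Conceptually, the dependency search walks from the selected object to everything that uses it, directly or indirectly, and then keeps only the object types you ticked. The sketch below models that idea in plain Python. It is not Informatica's implementation or API: the `USED_BY` table and every mapping, session, and workflow name in it are hypothetical, standing in for the metadata PowerCenter keeps in its repository.

```python
from collections import deque

# Hypothetical repository metadata (illustrative names only): each object maps
# to the objects that use it directly. PowerCenter keeps this in its repository.
USED_BY = {
    ("source", "Countries"): [("mapping", "m_load_countries"), ("mapping", "m_load_regions")],
    ("mapping", "m_load_countries"): [("session", "s_m_load_countries")],
    ("mapping", "m_load_regions"): [("session", "s_m_load_regions")],
    ("session", "s_m_load_countries"): [("workflow", "wf_daily_load")],
    ("session", "s_m_load_regions"): [("workflow", "wf_daily_load"), ("workflow", "wf_monthly_load")],
}


def find_dependents(obj, object_types):
    """Breadth-first walk over USED_BY collecting every transitive dependent,
    then keep only the types in object_types (like the ticked boxes in the dialog)."""
    seen = set()
    queue = deque([obj])
    while queue:
        current = queue.popleft()
        for dependent in USED_BY.get(current, []):
            if dependent not in seen:  # a workflow can be reached via several sessions
                seen.add(dependent)
                queue.append(dependent)
    return sorted(d for d in seen if d[0] in object_types)


if __name__ == "__main__":
    # Sessions are traversed (they link mappings to workflows) but filtered out of the result.
    for obj_type, name in find_dependents(("source", "Countries"), {"mapping", "workflow"}):
        print(obj_type, name)
```

Note that unticking an object type only hides it from the result; the walk still passes through sessions, which is why workflows are found even though no workflow uses the source directly.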

Once you click OK, the search results appear in a separate window, as shown below:
